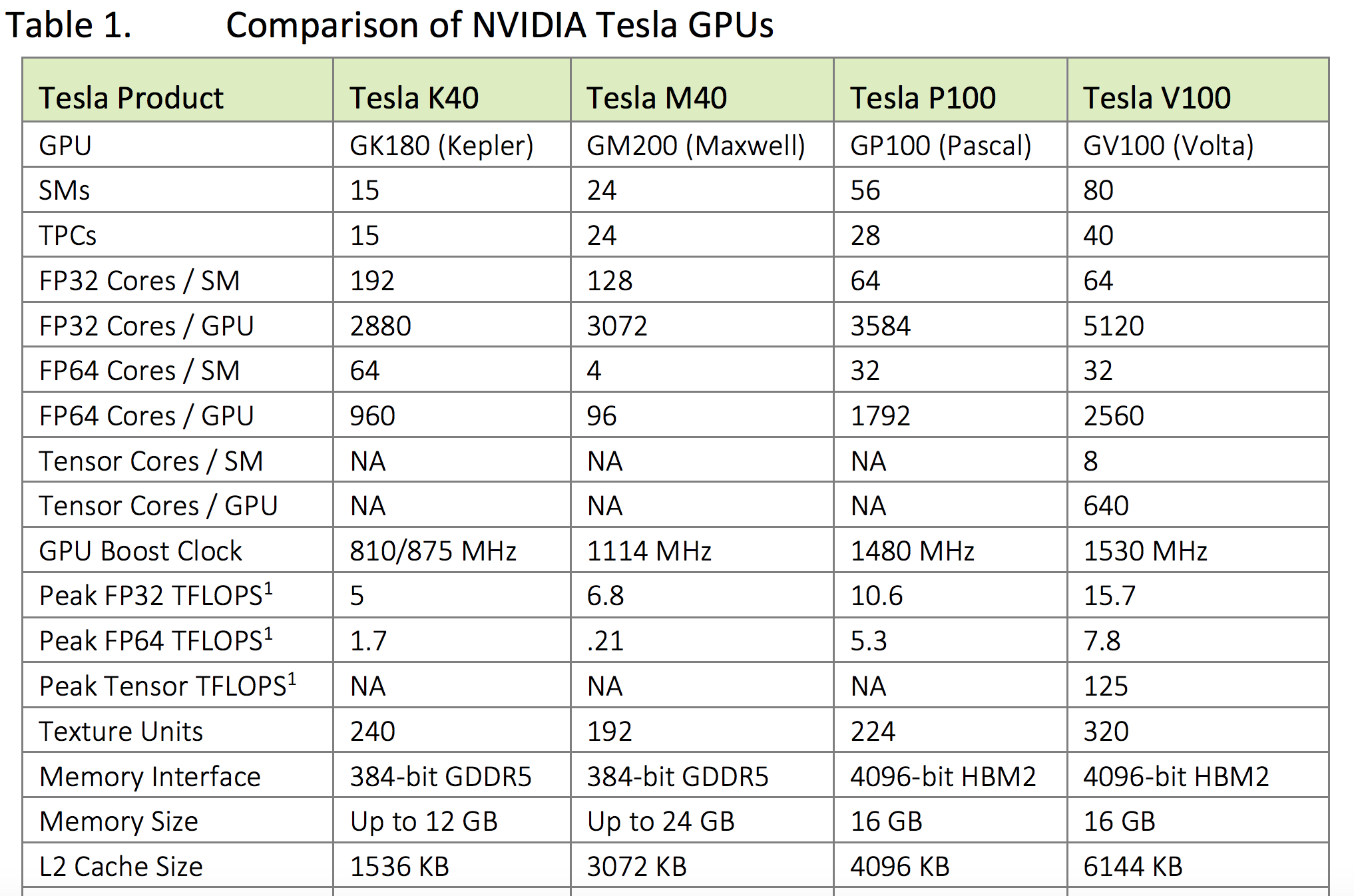

At the time of writing, only the Tesla V100 and Titan V have tensor cores. Both GPUs have 5120 CUDA cores, each of which can perform up to one single-precision multiply-accumulate operation (e.g. in fp32: x += y * z) per GPU clock (e.g. the Tesla V100 PCIe runs at 1.38 GHz).

Each tensor core operates on small 4x4 matrices and can perform one matrix multiply-accumulate operation per GPU clock. It multiplies two 4x4 fp16 matrices and adds the 4x4 fp32 product to an accumulator (which is also a 4x4 fp32 matrix).

It is called mixed precision because the input matrices are fp16, while the multiplication result and the accumulator are fp32 matrices.
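To make the fp16-in, fp32-accumulate idea concrete, here is a minimal software sketch in plain Python. It only emulates the arithmetic described above; the helper names (`to_fp16`, `to_fp32`, `tensor_core_mma`) are made up for this illustration, and the exact rounding behavior inside real hardware is an assumption, not something the text above specifies.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision (fp16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def to_fp32(x):
    """Round a Python float to the nearest IEEE single-precision (fp32) value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def tensor_core_mma(a, b, c):
    """Emulate D = A x B + C on 4x4 matrices.

    A and B are rounded to fp16 (the inputs); C and the running sums
    are kept in fp32 (the accumulator). Rounding after every add is an
    assumption of this sketch.
    """
    n = 4
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[to_fp32(v) for v in row] for row in c]
    for i in range(n):
        for j in range(n):
            acc = d[i][j]
            for k in range(n):
                # The product of two fp16 values fits exactly in fp32.
                acc = to_fp32(acc + a16[i][k] * b16[k][j])
            d[i][j] = acc
    return d

# Usage: identity x identity added to an accumulator filled with 4096.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
c = [[4096.0] * 4 for _ in range(4)]
d = tensor_core_mma(identity, identity, c)
print(d[0][0])          # 4097.0, representable in the fp32 accumulator
print(to_fp16(4097.0))  # 4096.0, the same sum would lose the +1 in fp16
```

The usage lines hint at why the accumulator is kept in fp32: near 4096 the spacing between fp16 values is 4, so a small product added to a large running sum would simply vanish if the sum were stored in fp16.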

Arguably, the more accurate name would be just "4x4 matrix cores", but the NVIDIA marketing team decided to use "tensor cores".

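In other words:

Simply put, a CUDA core can perform one multiply and one add (* and +) per clock cycle.

A tensor core can multiply two 4x4 matrices and add the result (* and +) in one clock cycle.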
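As a quick sanity check on how much work that is, the sketch below counts the scalar multiply-adds hidden inside one 4x4 by 4x4 matrix product. The function name `count_fmas` is invented for this example.

```python
def count_fmas(n=4):
    """Count scalar multiply-add steps in an n x n by n x n matrix product."""
    count = 0
    for i in range(n):          # each output row
        for j in range(n):      # each output column
            for k in range(n):  # each term of the dot product
                count += 1      # one multiply and one add
    return count

cuda_core_fmas_per_clock = 1               # one x += y * z per clock
tensor_core_fmas_per_clock = count_fmas()  # one 4x4 matrix op per clock

print(tensor_core_fmas_per_clock)  # 64
```

So one tensor core operation per clock corresponds to 64 of the scalar multiply-adds that a CUDA core does one at a time, which lines up with the figure of 64 FMA operations per clock that NVIDIA quotes.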

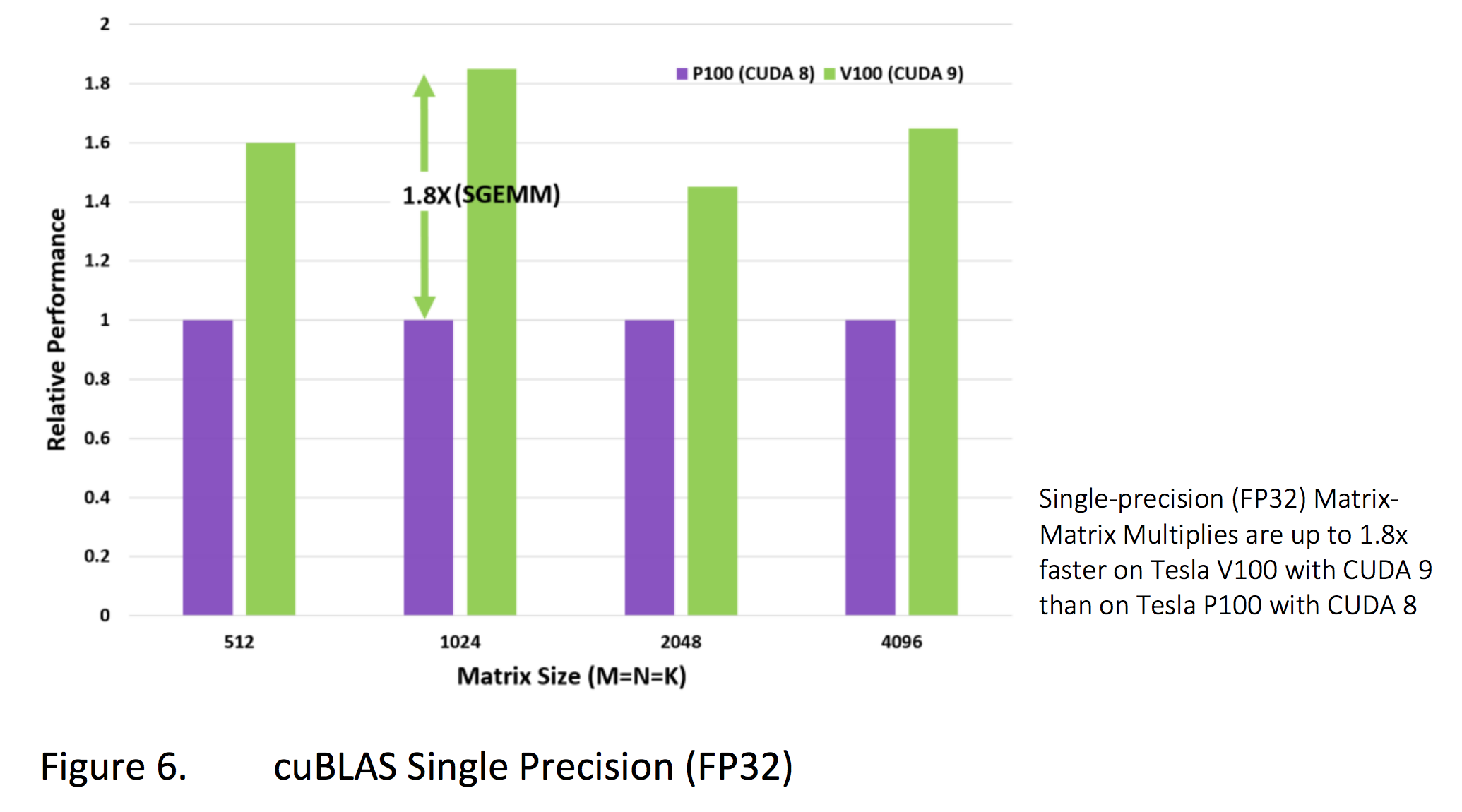

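A comparison with earlier GPUs makes this clear.

See? One works through the data one element at a time, while the other processes a whole tile at a time.

The official website puts it this way: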

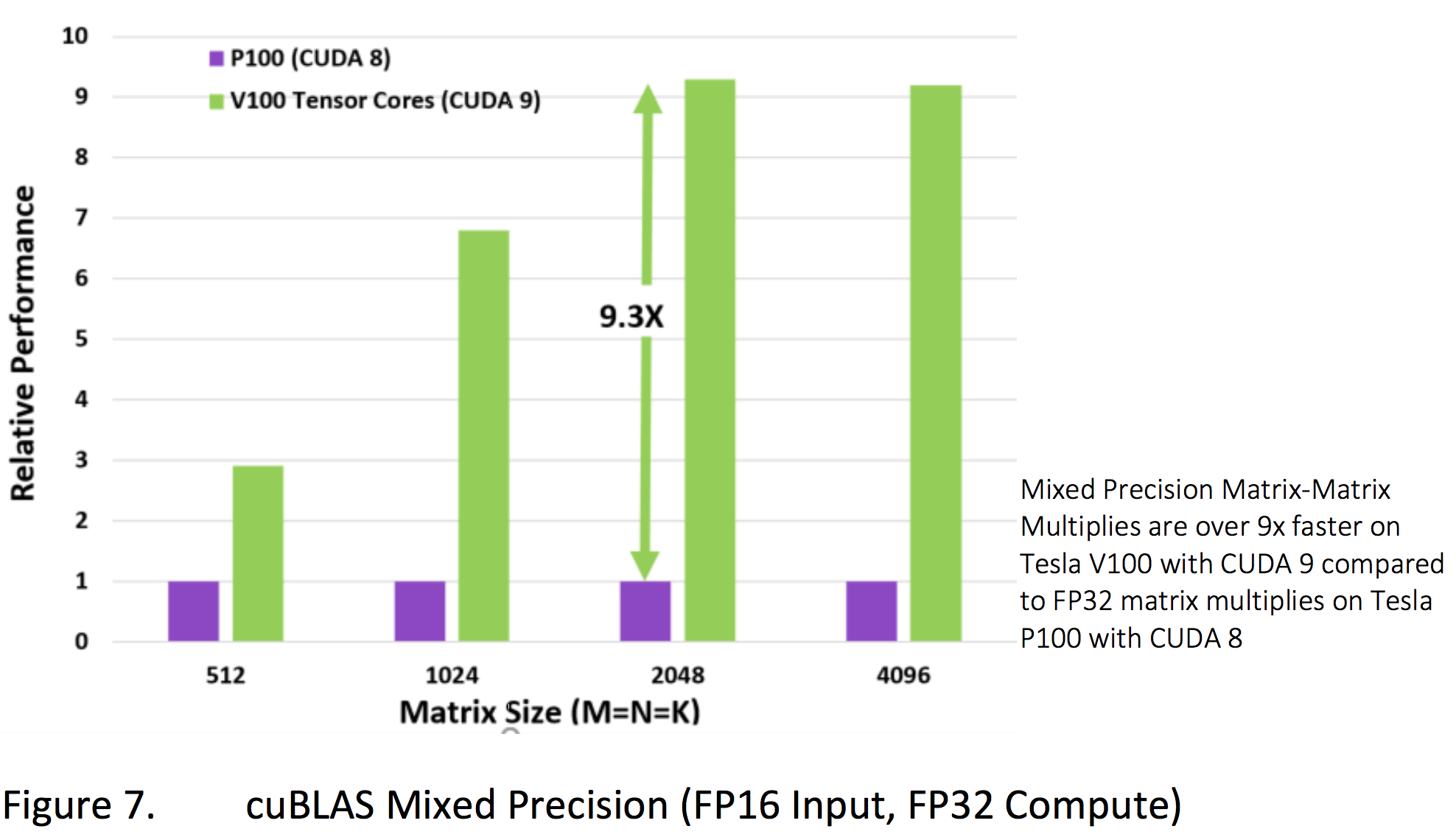

Volta is equipped with 640 Tensor Cores, each performing 64 floating-point fused-multiply-add (FMA) operations per clock. That delivers up to 125 TFLOPS for training and inference applications. This means that developers can run deep learning training using a mixed precision of FP16 compute with FP32 accumulate, achieving both a 3X speedup over the previous generation and convergence to a network’s expected accuracy levels.
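The 125 TFLOPS figure can be roughly reproduced from the numbers in this post. The sketch below is back-of-the-envelope arithmetic only: it assumes one FMA counts as two floating-point operations (the usual convention, but an assumption here), and the function names are made up for illustration.

```python
FLOPS_PER_FMA = 2   # assumption: one multiply plus one add
TENSOR_CORES = 640  # from the NVIDIA quote
FMA_PER_TENSOR_CORE_PER_CLOCK = 64
CUDA_CORES = 5120   # from the text above
FMA_PER_CUDA_CORE_PER_CLOCK = 1

def peak_tflops(units, fma_per_unit_per_clock, clock_hz):
    """Theoretical peak throughput in TFLOPS."""
    return units * fma_per_unit_per_clock * FLOPS_PER_FMA * clock_hz / 1e12

def implied_clock_ghz(tflops, units, fma_per_unit_per_clock):
    """Clock in GHz needed to reach a given peak TFLOPS figure."""
    flops_per_clock = units * fma_per_unit_per_clock * FLOPS_PER_FMA
    return tflops * 1e12 / flops_per_clock / 1e9

pcie_clock_hz = 1.38e9  # Tesla V100 PCIe clock mentioned earlier

tensor_tflops = peak_tflops(TENSOR_CORES, FMA_PER_TENSOR_CORE_PER_CLOCK, pcie_clock_hz)
cuda_tflops = peak_tflops(CUDA_CORES, FMA_PER_CUDA_CORE_PER_CLOCK, pcie_clock_hz)
clock_for_125 = implied_clock_ghz(125, TENSOR_CORES, FMA_PER_TENSOR_CORE_PER_CLOCK)

print(round(tensor_tflops, 1))  # about 113.0 at 1.38 GHz
print(round(cuda_tflops, 1))    # about 14.1 at 1.38 GHz
print(round(clock_for_125, 2))  # about 1.53 GHz needed for 125 TFLOPS
```

Under these assumptions, the tensor cores give 8 times the peak throughput of the fp32 CUDA cores at the same clock, and the quoted 125 TFLOPS implies a clock of roughly 1.53 GHz rather than the 1.38 GHz of the PCIe card.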

Finally, there is this summary:

Replies:

god posted on Jan. 22, 2019, 11:16 p.m.

I took another look, and it seems the V100's tensor cores do not support int8 or int4.

god posted on Jan. 22, 2019, 10:39 p.m.

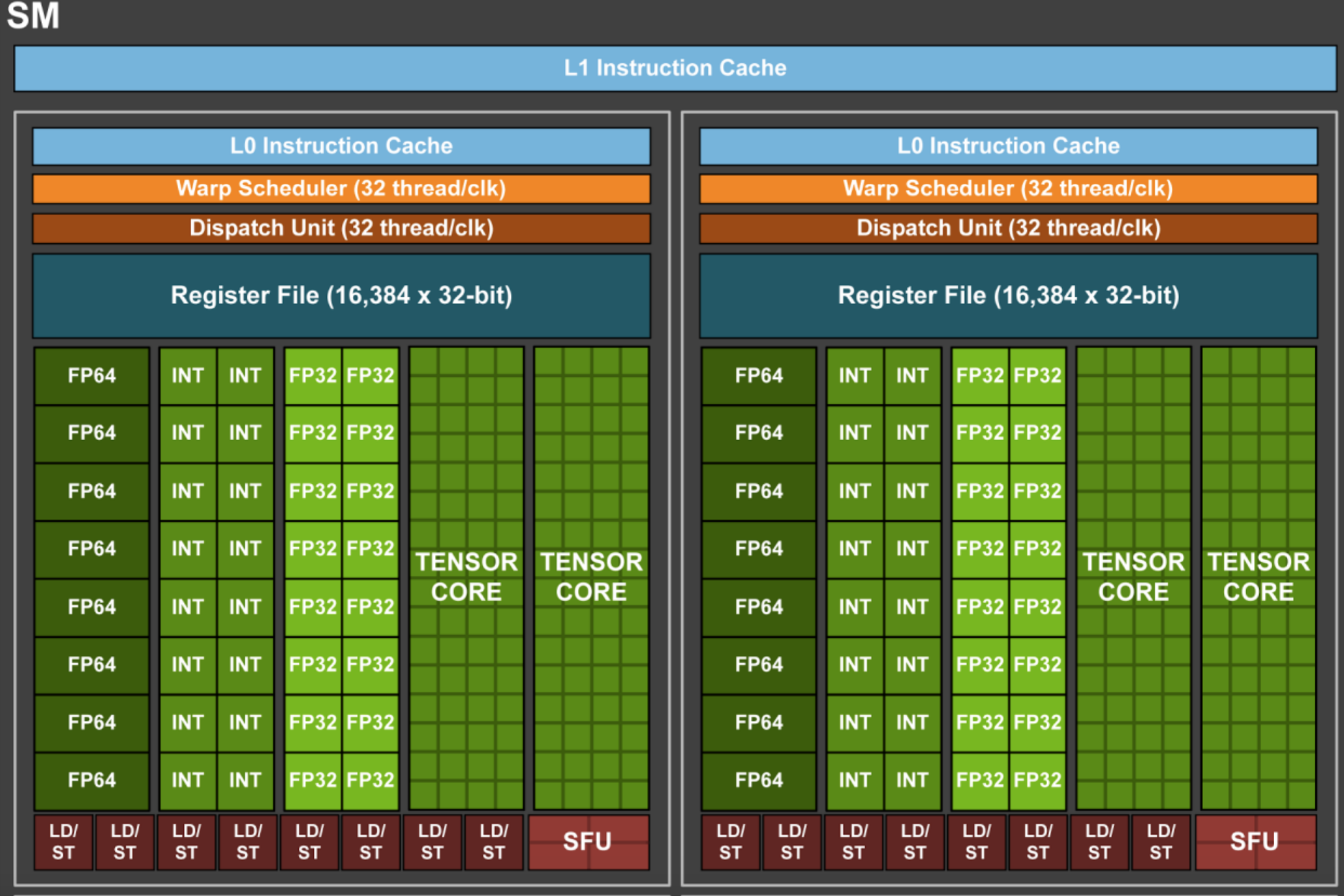

This image shows that the tensor cores really do take up a large share of the area on the new card.